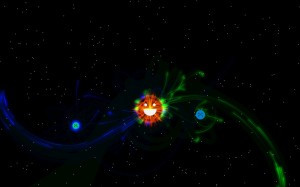

For fun, I decided to implement my own version of *Ray Tracing in One Weekend*. It runs on desktop, and I also have a WebAssembly version on this here site (use this one on iOS/Safari or if the other link doesn’t work, since some browsers don’t support SharedArrayBuffer). I did a few things differently in my version:

- SDL2 rendering. The book outputs PPM files through stdout, but I realized I didn’t have a single program that could view PPM files, and a quick Google search didn’t turn up anything promising. So I went with rendering directly to the screen with SDL. It was a bit more upfront work, but it made debugging a lot easier later on.

- Dear ImGui for the built-in UI. This makes testing much easier, since you don’t have to shut down the program to change variables and see how they affect the output. I’ve always wanted to use Dear ImGui in a project but never had the chance, and it’s now one of my favorite libraries. I know the limitations of immediate-mode GUIs, but it’s definitely nice not having to pull in the heft of something like Qt or GTK.

- GLM for math instead of custom math classes. I didn’t feel the need to reinvent the wheel here, especially since GLM is well optimized and header-only.

- Multithreading. C++ has come a long way here; I remember using the OS threading libraries in the past and having a miserable time. Amazingly, with std::thread and std::atomic I got threading working in a day without any significant bugs. Since I never trust threading, I’m sure something is still lurking in there, but so far everything has been smooth. I haven’t done any optimizations such as eliminating false sharing on cache lines, but the speedup so far seems roughly linear, i.e., 12 threads is about 12x faster than one thread.

- Emscripten for WebAssembly. Fortunately Emscripten has very good SDL2 support, and surprisingly, it can even do multithreading. The only downside is that, because of browser security restrictions, the page has to be served with cross-origin isolation headers (Cross-Origin-Opener-Policy and Cross-Origin-Embedder-Policy) before SharedArrayBuffer is available, and those headers significantly restrict what’s allowed on the page. So I had to figure out how to modify Apache configurations to get it online. Overall, it wasn’t that hard.

- CMake for cross-platform build support (tested with Visual Studio 2022, Xcode, and WebAssembly). I have mixed feelings about CMake. On one hand, it’s an incredibly useful tool, and I have no interest in maintaining lots of separate project files, so it’s great in that regard. On the other hand, I absolutely hate the syntax of its custom language. I’m still baffled as to why they couldn’t have just used a scripting language like Lua, JavaScript, or Python. CMake’s syntax is just weird: things like “else” look like function calls, and it’s not clear when you’re supposed to quote things and when you’re not. It’s also not clear when the order of calls matters and when it doesn’t, or whether they’re function calls or some sort of declaration. I’m reminded of the old Bjarne Stroustrup quote: “There are only two kinds of languages: the ones people complain about and the ones nobody uses.” Replace “languages” with “tools” and I think it applies to CMake too.
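
Rendering to the screen instead of a PPM file mostly comes down to turning the tracer’s floating-point colors into 8-bit pixels that SDL can upload, for example with `SDL_UpdateTexture` on a streaming texture. Here is a minimal sketch of that conversion step, assuming a linear RGB framebuffer, the gamma-2 (square root) correction the book uses, and a texture created with `SDL_PIXELFORMAT_ARGB8888`. The `Color` struct, `toByte`, and `packPixels` are hypothetical names for illustration, not the code from my version:

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical linear-space color, one per pixel, as produced by the tracer.
struct Color {
    float r, g, b;
};

// Converts one linear channel to an 8-bit value: clamp to [0, 1], apply the
// gamma-2 approximation (square root), then scale and round to 0..255.
inline std::uint8_t toByte(float linear) {
    float c = std::sqrt(std::clamp(linear, 0.0f, 1.0f));
    return static_cast<std::uint8_t>(std::lround(c * 255.0f));
}

// Packs a framebuffer into 32-bit 0xAARRGGBB values with opaque alpha,
// the layout SDL uses for SDL_PIXELFORMAT_ARGB8888 textures.
std::vector<std::uint32_t> packPixels(const std::vector<Color>& framebuffer) {
    std::vector<std::uint32_t> pixels(framebuffer.size());
    for (std::size_t i = 0; i < framebuffer.size(); ++i) {
        const Color& c = framebuffer[i];
        pixels[i] = 0xFF000000u
                  | (std::uint32_t{toByte(c.r)} << 16)
                  | (std::uint32_t{toByte(c.g)} << 8)
                  |  std::uint32_t{toByte(c.b)};
    }
    return pixels;
}
```

The packed vector can then be handed to `SDL_UpdateTexture` with a pitch of `width * sizeof(std::uint32_t)` and drawn with `SDL_RenderCopy`, which is also an easy place to redraw after every pass for a progressive preview.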
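
The threading itself can stay small. Below is a minimal sketch of one common scheme, assuming the work is split by image row: each `std::thread` claims the next unrendered row from a shared `std::atomic<int>` counter, so faster threads simply take more rows and no locks are needed. `renderRows` and `shadePixel` are hypothetical names, the image holds one `float` per pixel to keep it short, and `shadePixel` stands in for tracing all the samples through a pixel. This is an illustration of the technique, not necessarily how my version divides the work:

```cpp
#include <algorithm>
#include <atomic>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Renders a width x height image using `threadCount` worker threads.
// Each worker repeatedly claims the next unclaimed row via an atomic counter.
std::vector<float> renderRows(int width, int height, unsigned threadCount,
                              const std::function<float(int, int)>& shadePixel) {
    std::vector<float> image(static_cast<std::size_t>(width) * height);
    std::atomic<int> nextRow{0};
    threadCount = std::max(1u, threadCount);  // always use at least one worker

    auto worker = [&] {
        // fetch_add returns the previous value, so every row is claimed exactly once.
        for (int y = nextRow.fetch_add(1); y < height; y = nextRow.fetch_add(1)) {
            for (int x = 0; x < width; ++x) {
                // Rows are disjoint, so no two threads write the same element.
                image[static_cast<std::size_t>(y) * width + x] = shadePixel(x, y);
            }
        }
    };

    std::vector<std::thread> threads;
    for (unsigned i = 0; i < threadCount; ++i) {
        threads.emplace_back(worker);
    }
    for (std::thread& t : threads) {
        t.join();  // wait for every worker before returning the image
    }
    return image;
}
```

A typical call passes `std::thread::hardware_concurrency()` as the thread count; the `std::max` guard covers the case where it returns 0. Because each thread writes whole rows, false sharing is limited to the cache lines where two rows meet.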

Anyway, in terms of future goals, what I’m planning next for this is:

- glTF mesh/scene support

- Lighting/Emissive materials

- Textures

- PBR materials

- Optimize ray hit detection with something like octrees (haven’t really decided on a data structure yet)

- Maybe a React UI for the web version (although I really like Dear ImGui even there)
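
Whichever structure I end up choosing for faster hit detection, octree or something else, its inner loop usually relies on a cheap test of whether a ray hits an axis-aligned bounding box at all, so whole groups of objects can be skipped. Here is a minimal sketch of the standard slab test, written without GLM so it stands alone. `Vec3`, `Ray`, `Aabb`, and `hitAabb` are hypothetical names, and the ray is assumed to store a precomputed reciprocal direction:

```cpp
#include <algorithm>
#include <utility>

struct Vec3 { float x, y, z; };

// Ray with a precomputed reciprocal direction (1/dir per axis), which turns the
// per-axis divisions of the slab test into multiplications. A zero direction
// component becomes an IEEE infinity, which the test below tolerates.
struct Ray {
    Vec3 origin;
    Vec3 invDir;
};

struct Aabb {
    Vec3 minCorner, maxCorner;
};

// Slab test: shrink the ray's parameter interval [tMin, tMax] to the range in
// which it lies between each pair of parallel box planes.
// Returns true if the box is hit somewhere inside [tMin, tMax].
bool hitAabb(const Ray& ray, const Aabb& box, float tMin, float tMax) {
    const float o[3]   = {ray.origin.x, ray.origin.y, ray.origin.z};
    const float inv[3] = {ray.invDir.x, ray.invDir.y, ray.invDir.z};
    const float lo[3]  = {box.minCorner.x, box.minCorner.y, box.minCorner.z};
    const float hi[3]  = {box.maxCorner.x, box.maxCorner.y, box.maxCorner.z};

    for (int axis = 0; axis < 3; ++axis) {
        float t0 = (lo[axis] - o[axis]) * inv[axis];
        float t1 = (hi[axis] - o[axis]) * inv[axis];
        if (t0 > t1) std::swap(t0, t1);  // a negative direction flips the slab
        tMin = std::max(tMin, t0);
        tMax = std::min(tMax, t1);
        if (tMax <= tMin) return false;  // intervals no longer overlap: miss
    }
    return true;
}
```

In a hierarchy, a node’s children are only visited when this test passes, which is where the speedup over testing every object comes from. The same test underlies both octrees and bounding volume hierarchies, so it would carry over whichever one I pick.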